Parameter scan

Contents

Parameter scan#

Note: the cost of running the entire notebook is larger than 1 FlexCredit.

In this notebook, we will show an example of using tidy3d to evaluate device performance over a set of many design parameters.

This example will also provide a walkthrough of Tidy3D’s Job and Batch features for managing both individual simulations and sets of simulations.

Note: as of version

1.8, the tidy3d.web.run_async function handles the same functionality as the batch, with a simpler syntax. As such, it could be a good alternative for parameter scan depending on how your script is set up.

For demonstration, we look at the splitting ratio of a directional coupler as we vary the coupling length between two waveguides. The sidewall of the waveguides is slanted, deviating from the vertical direction by sidewall_angle.

[1]:

# standard python imports

import numpy as np

import matplotlib.pyplot as plt

import os

import gdstk

# tidy3D imports

import tidy3d as td

from tidy3d import web

Setup#

First we set up some global parameters

[2]:

# wavelength / frequency

lambda0 = 1.550 # all length scales in microns

freq0 = td.constants.C_0 / lambda0

fwidth = freq0 / 10

# Permittivity of waveguide and substrate

wg_n = 3.48

sub_n = 1.45

mat_wg = td.Medium(permittivity=wg_n**2)

mat_sub = td.Medium(permittivity=sub_n**2)

# Waveguide dimensions

# Waveguide height

wg_height = 0.22

# Waveguide width

wg_width = 0.45

# Waveguide separation in the beginning/end

wg_spacing_in = 8

# Reference plane where the cross section of the device is defined

reference_plane = "bottom"

# Angle of the sidewall deviating from the vertical ones, positive values for the base larger than the top

sidewall_angle = np.pi / 6

# Total device length along propagation direction

device_length = 100

# Length of the bend region

bend_length = 16

# space between waveguide and PML

pml_spacing = 1

# resolution control: minimum number of grid cells per wavelength in each material

grid_cells_per_wvl = 16

Define waveguide bends and coupler#

Here is where we define our directional coupler shape programmatically in terms of the geometric parameters

[3]:

def tanh_interp(max_arg):

"""Interpolator for tanh with adjustable extension"""

scale = 1 / np.tanh(max_arg)

return lambda u: 0.5 * (1 + scale * np.tanh(max_arg * (u * 2 - 1)))

def make_coupler(

length,

wg_spacing_in,

wg_width,

wg_spacing_coup,

coup_length,

bend_length,

npts_bend=30,

):

"""Make an integrated coupler using the gdstk RobustPath object."""

# bend interpolator

interp = tanh_interp(3)

delta = wg_width + wg_spacing_coup - wg_spacing_in

offset = lambda u: wg_spacing_in + interp(u) * delta

coup = gdstk.RobustPath(

(-0.5 * length, 0),

(wg_width, wg_width),

wg_spacing_in,

simple_path=True,

layer=1,

datatype=[0, 1],

)

coup.segment((-0.5 * coup_length - bend_length, 0))

coup.segment(

(-0.5 * coup_length, 0),

offset=[lambda u: -0.5 * offset(u), lambda u: 0.5 * offset(u)],

)

coup.segment((0.5 * coup_length, 0))

coup.segment(

(0.5 * coup_length + bend_length, 0),

offset=[lambda u: -0.5 * offset(1 - u), lambda u: 0.5 * offset(1 - u)],

)

coup.segment((0.5 * length, 0))

return coup

Create Simulation and Submit Job#

The following function creates a tidy3d simulation object for a set of design parameters.

Note that the simulation has not been run yet, just created.

[4]:

def make_sim(coup_length, wg_spacing_coup, domain_field=False):

"""Make a simulation with a given length of the coupling region and

distance between the waveguides in that region. If ``domain_field``

is True, a 2D in-plane field monitor will be added.

"""

# Geometry must be placed in GDS cells to import into Tidy3D

coup_cell = gdstk.Cell("Coupler")

substrate = gdstk.rectangle(

(-device_length / 2, -wg_spacing_in / 2 - 10),

(device_length / 2, wg_spacing_in / 2 + 10),

layer=0,

)

coup_cell.add(substrate)

# Add the coupler to a gdstk cell

gds_coup = make_coupler(

device_length,

wg_spacing_in,

wg_width,

wg_spacing_coup,

coup_length,

bend_length,

)

coup_cell.add(gds_coup)

# Substrate

(oxide_geo,) = td.PolySlab.from_gds(

gds_cell=coup_cell,

gds_layer=0,

gds_dtype=0,

slab_bounds=(-10, 0),

reference_plane=reference_plane,

axis=2,

)

oxide = td.Structure(geometry=oxide_geo, medium=mat_sub)

# Waveguides (import all datatypes if gds_dtype not specified)

coupler1_geo, coupler2_geo = td.PolySlab.from_gds(

gds_cell=coup_cell,

gds_layer=1,

slab_bounds=(0, wg_height),

sidewall_angle=sidewall_angle,

reference_plane=reference_plane,

axis=2,

)

coupler1 = td.Structure(geometry=coupler1_geo, medium=mat_wg)

coupler2 = td.Structure(geometry=coupler2_geo, medium=mat_wg)

# Simulation size along propagation direction

sim_length = 2 + 2 * bend_length + coup_length

# Spacing between waveguides and PML

sim_size = [

sim_length,

wg_spacing_in + wg_width + 2 * pml_spacing,

wg_height + 2 * pml_spacing,

]

# source

src_pos = -sim_length / 2 + 0.5

msource = td.ModeSource(

center=[src_pos, wg_spacing_in / 2, wg_height / 2],

size=[0, 3, 2],

source_time=td.GaussianPulse(freq0=freq0, fwidth=fwidth),

direction="+",

mode_spec=td.ModeSpec(),

mode_index=0,

)

mon_in = td.ModeMonitor(

center=[(src_pos + 0.5), wg_spacing_in / 2, wg_height / 2],

size=[0, 3, 2],

freqs=[freq0],

mode_spec=td.ModeSpec(),

name="in",

)

mon_ref_bot = td.ModeMonitor(

center=[(src_pos + 0.5), -wg_spacing_in / 2, wg_height / 2],

size=[0, 3, 2],

freqs=[freq0],

mode_spec=td.ModeSpec(),

name="refect_bottom",

)

mon_top = td.ModeMonitor(

center=[-(src_pos + 0.5), wg_spacing_in / 2, wg_height / 2],

size=[0, 3, 2],

freqs=[freq0],

mode_spec=td.ModeSpec(),

name="top",

)

mon_bot = td.ModeMonitor(

center=[-(src_pos + 0.5), -wg_spacing_in / 2, wg_height / 2],

size=[0, 3, 2],

freqs=[freq0],

mode_spec=td.ModeSpec(),

name="bottom",

)

monitors = [mon_in, mon_ref_bot, mon_top, mon_bot]

if domain_field == True:

domain_monitor = td.FieldMonitor(

center=[0, 0, wg_height / 2],

size=[td.inf, td.inf, 0],

freqs=[freq0],

name="field",

)

monitors.append(domain_monitor)

# initialize the simulation

sim = td.Simulation(

size=sim_size,

grid_spec=td.GridSpec.auto(min_steps_per_wvl=grid_cells_per_wvl),

structures=[oxide, coupler1, coupler2],

sources=[msource],

monitors=monitors,

run_time=50 / fwidth,

boundary_spec=td.BoundarySpec.all_sides(boundary=td.PML()),

)

return sim

Inspect Simulation#

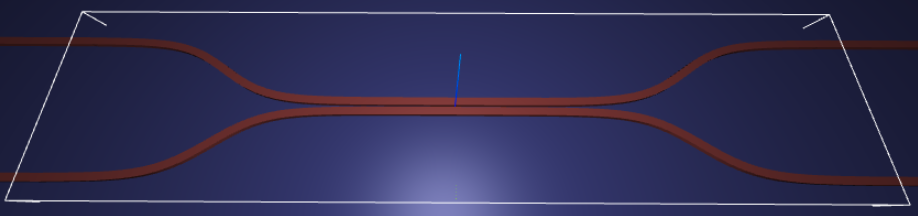

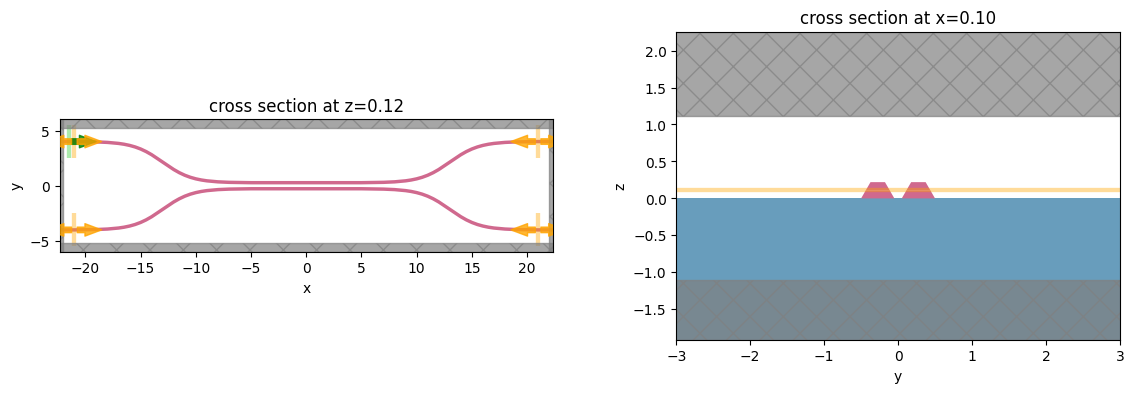

Let’s create and inspect a single simulation to make sure it was defined correctly before doing the full scan. The sidewalls of the waveguides deviate from the vertical direction by 30 degree. We also add an in-plane field monitor to have a look at the fields evolution in this one simulation. We will not use such a monitor in the batch to avoid storing unnecesarrily large amounts of data.

[5]:

# Length of the coupling region

coup_length = 10

# Waveguide separation in the coupling region

wg_spacing_coup = 0.10

sim = make_sim(coup_length, wg_spacing_coup, domain_field=True)

[6]:

# visualize geometry

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(14, 4))

sim.plot(z=wg_height / 2 + 0.01, ax=ax1)

sim.plot(x=0.1, ax=ax2)

ax2.set_xlim([-3, 3])

plt.show()

Create and Submit Job#

The Job object provides an interface for managing simulations.

job = Job(simulation) will create a job and upload the simulation to our server to run.

Then, one may call various methods of job to monitor progress, download results, and get information.

For more information, refer to the API reference.

[7]:

# create job, upload sim to server to begin running

job = web.Job(simulation=sim, task_name="CouplerVerify", verbose=True)

# download the results and load them into a simulation

sim_data = job.run(path="data/sim_data.hdf5")

Postprocessing#

The following function takes a completed simulation (with data loaded into it) and computes the quantities of interest.

For this case, we measure both the total transmission in the right ports and also the ratio of power between the top and bottom ports.

[8]:

def measure_transmission(sim_data):

"""Constructs a "row" of the scattering matrix when sourced from top left port"""

input_amp = sim_data["in"].amps.sel(direction="+")

amps = np.zeros(4, dtype=complex)

directions = ("-", "-", "+", "+")

for i, (monitor, direction) in enumerate(

zip(sim_data.simulation.monitors[:4], directions)

):

amp = sim_data[monitor.name].amps.sel(direction=direction)

amp_normalized = amp / input_amp

amps[i] = np.squeeze(amp_normalized.values)

return amps

[9]:

# monitor and test out the measure_transmission function the results of the single run

amps_arms = measure_transmission(sim_data)

print("mode amplitudes in each port:\n")

for amp, monitor in zip(amps_arms, sim_data.simulation.monitors[:-1]):

print(f'\tmonitor = "{monitor.name}"')

print(f"\tamplitude^2 = {abs(amp)**2:.2f}")

print(f"\tphase = {(np.angle(amp)):.2f} (rad)\n")

mode amplitudes in each port:

monitor = "in"

amplitude^2 = 0.00

phase = 0.78 (rad)

monitor = "refect_bottom"

amplitude^2 = 0.00

phase = -2.37 (rad)

monitor = "top"

amplitude^2 = 0.95

phase = -0.37 (rad)

monitor = "bottom"

amplitude^2 = 0.04

phase = 1.20 (rad)

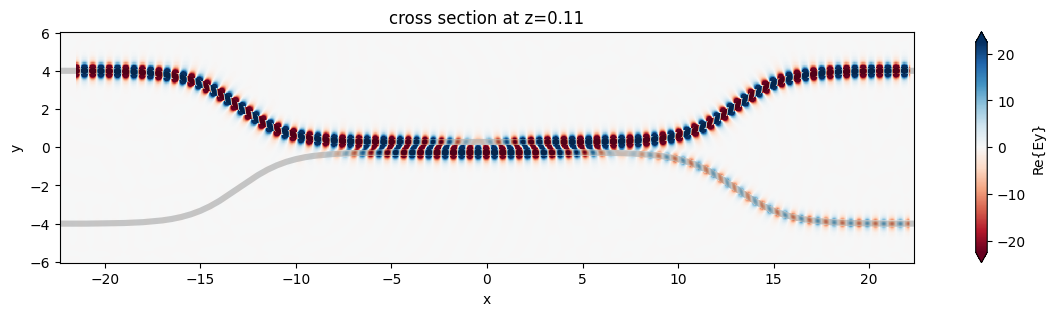

[10]:

fig, ax = plt.subplots(1, 1, figsize=(16, 3))

sim_data.plot_field("field", "Ey", z=wg_height / 2, freq=freq0, ax=ax)

plt.show()

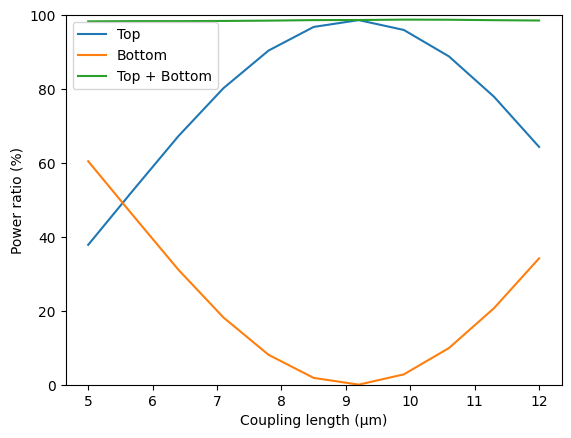

1D Parameter Scan#

Now we will scan through the coupling length parameter to see the effect on splitting ratio.

To do this, we will create a list of simulations corresponding to each parameter combination.

We will use this list to create a Batch object, which has similar functionality to Job but allows one to manage a set of jobs.

First, we create arrays to store the input and output values.

[11]:

# create variables to store parameters, simulation information, results

Nl = 11

ls = np.linspace(5, 12, Nl)

split_ratios = np.zeros(Nl)

efficiencies = np.zeros(Nl)

Create Batch#

We now create our list of simulations and use them to initialize a Batch.

For more information, refer to the API reference.

[12]:

# submit all jobs

sims = {f"l={l:.2f}": make_sim(l, wg_spacing_coup) for l in ls}

batch = web.Batch(simulations=sims, verbose=True)

Monitor Batch#

Here we can perform real-time monitoring of how many of the jobs in the batch have completed.

[13]:

batch_results = batch.run(path_dir="data")

[15:25:15] Started working on Batch. container.py:402

[15:29:36] Batch complete. container.py:436

Load and visualize Results#

Finally, we can compute the output quantities and load them into the arrays we created initally.

Then we may plot the results.

[14]:

amps_batch = []

for task_name, sim_data in batch_results.items():

amps_arms_i = np.array(measure_transmission(sim_data))

amps_batch.append(amps_arms_i)

amps_batch = np.stack(amps_batch, axis=1)

print(amps_batch.shape) # (4, Nl)

print(amps_batch)

(4, 11)

[[ 9.32660088e-03-7.68083873e-04j -4.59595367e-04+2.69994380e-03j

8.37040903e-03+8.20768240e-04j 2.37986526e-03-3.87142288e-03j

4.97633888e-03+9.84196707e-03j 6.30758817e-03-4.15473485e-03j

1.71191510e-03+3.41521925e-03j 6.82371318e-03+2.25454434e-04j

1.66651459e-03+1.52869562e-03j 7.04234980e-03+5.27028750e-04j

1.90538505e-03-2.87642868e-03j]

[-7.53013301e-03+1.51040665e-03j 3.56804163e-03-3.22005295e-03j

-2.63847220e-03-2.54007332e-03j -1.24888326e-03-9.15337392e-04j

-1.08607331e-03-8.01508908e-03j -2.07632115e-03+2.71375464e-03j

1.76179969e-03-8.09665204e-03j -4.14957609e-03+1.17583460e-03j

2.55484244e-03-2.11666514e-03j -2.59056027e-03-7.47507198e-03j

5.47408845e-04+3.76850561e-03j]

[ 4.47049937e-01+4.22592826e-01j 6.77077433e-01-2.61317135e-01j

2.84107866e-02-8.19598010e-01j -8.13541567e-01-3.74902932e-01j

-7.02605331e-01+6.40683534e-01j 3.29485337e-01+9.27071505e-01j

9.91291660e-01+6.20715656e-02j 4.60259499e-01-8.64875357e-01j

-5.90996284e-01-7.34227731e-01j -8.50786816e-01+2.35290285e-01j

-9.93543887e-02+7.95952043e-01j]

[ 5.36463307e-01-5.62986316e-01j -2.40941041e-01-6.31690878e-01j

-5.57454361e-01-2.16363298e-02j -1.80201173e-01+3.86478464e-01j

1.90802101e-01+2.11088069e-01j 1.28264763e-01-4.50723041e-02j

-9.51535635e-04+1.64265730e-02j 1.47629949e-01+7.87643978e-02j

2.45271423e-01-1.97499804e-01j -1.21816572e-01-4.38475083e-01j

-5.80485303e-01-7.11220501e-02j]]

[15]:

powers = abs(amps_batch) ** 2

power_top = powers[2]

power_bot = powers[3]

power_out = power_top + power_bot

[16]:

plt.plot(ls, 100 * power_top, label="Top")

plt.plot(ls, 100 * power_bot, label="Bottom")

plt.plot(ls, 100 * power_out, label="Top + Bottom")

plt.xlabel("Coupling length (µm)")

plt.ylabel("Power ratio (%)")

plt.ylim(0, 100)

plt.legend()

plt.show()

Final Remarks#

Batches provide some other convenient functionality for managing large numbers of jobs.

For example, one can save the batch information to file and load the batch at a later time, if needing to disconnect from the service while the jobs are running.

[17]:

# save batch metadata

batch.to_file("data/batch_data.json")

# load batch metadata into a new batch

loaded_batch = web.Batch.from_file("data/batch_data.json")

For more reference, refer to our documentation.